Statistical analysis of quasar spectra at the University of Washington (UW)

At UW, I worked with Professor Matthew McQuinn to study the large-scale evolution of the universe. This experience laid the foundation for my later work in data science. I first acquired domain knowledge through literature reviews and advice from leading experts, then used that knowledge to define a novel project goal. I collected large datasets from an astronomical observatory, developed a Python pipeline to process the data, and applied advanced statistical methods to derive insights from it. These insights allowed us to place tighter constraints on the thermal evolution of the universe using an approach that had not been tried before. I published this work in the peer-reviewed journal Monthly Notices of the Royal Astronomical Society. Read my paper here.

Modeling Emissions from Cosmological Phenomena (I-fronts), UCR

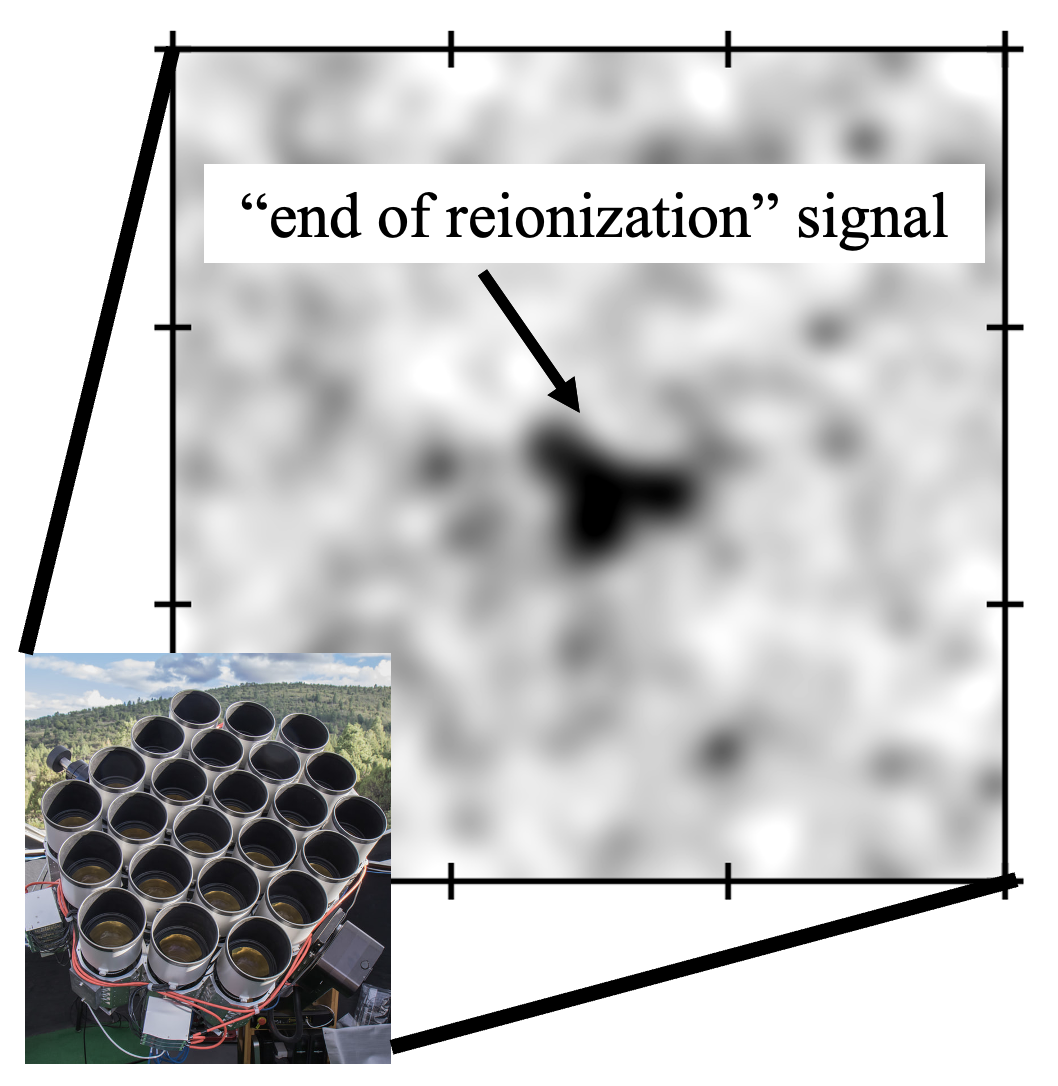

After graduating with a BSc in astrophysics from UW, I pursued a PhD in physics at the University of California, Riverside (UCR). During my PhD, I worked on two classes of projects that add to my data science skill set: (1) modeling emissions from cosmological phenomena and (2) artificial-intelligence-assisted super-resolution of cosmological simulations. In project 1, I implemented dimensionality reduction techniques and extensive validation testing to produce a reliable model that reduces computational cost by a factor of 10,000. This model was then used to generate realistic mock images that inform the stakeholders (observational astronomers) about current and future observational prospects. We found that the end of reionization (a pivotal moment in cosmic history that has never been directly detected) is likely detectable with a dedicated observing program. See figure 2 for an example mock image. This project resulted in two first-author publications. Read these papers here.

Artificial Intelligence Assisted Cosmological Simulations, UCR

In project 2 of my PhD, I moved toward applying generative artificial intelligence (AI) to cosmological simulations. I led a deep-learning project to super-resolve 3-dimensional cosmological simulations to 8 times higher resolution over a billion years of cosmic evolution. Figures 3 and 4 show the time evolution of the low- and high-resolution training pairs that I created, respectively. The resulting spatial dynamic range would allow simulations to model small scales (think small, faint galaxies) and large scales (think rare sources like massive, bright galaxies) simultaneously. I used the Globus data transfer tool to move massive, terabyte-scale cosmological simulation files and then preprocessed these simulations in preparation for model training. I then trained a generative adversarial network (StyleGAN), written in PyTorch, on GPU nodes of the Frontera supercomputer, the fastest academic supercomputer in the United States. I monitored the training of the generator and discriminator with TensorBoard to confirm that training was progressing properly. I decided to graduate before completing this project, but I made significant progress on preprocessing and early model training, and I have trained other researchers who will complete the project in the near future.